Intelligence failures and a theory of change for forecasting

Better forecasting might reduce the risk of nuclear war

In this blog post I’m going to try to do two things. First, I’m going to make the case that failures of both the US and Soviet intelligence services substantially increased the risk of nuclear war in the atomic age. Then I’m going to argue that these case studies provide potentially the best example of how Tetlock-style forecasting can be used to improve the world.

Forecasting

Tetlock-style forecasting is the practice of taking individuals or, even better, groups of individuals, having them predict the probability of events on a scale from 0 to 1, and then scoring them on their predictions. Tetlock’s experiments have shown that some people are much better at predicting political events than average individuals, domain experts and, most relevant here, CIA intelligence analysts with access to classified material. However, as with any improvement in human power, it’s an open question whether this tool is more likely to be used for good or ill. For instance, one could imagine authoritarian regimes using these methods to suppress protests more effectively. Furthermore, it’s not clear how many tragedies could have been averted if the relevant actors had had access to higher quality information. For instance, the North Korean economy isn’t failing because President Kim is wrong about whether or not his economic policies are good - it’s failing because Kim uses the policies that are most expedient for staying in power for as long as possible. If one were trying to improve the long-term future as much as possible, it’s not clear that developing and advocating for forecasting is better than reducing the burden of disease in low-income countries, which has enormous benefits and which we can be very confident we do effectively.
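Scoring is what makes the practice work, because it lets you tell good forecasters from bad ones. Here’s a minimal sketch, assuming the binary Brier score as the scoring rule (the post doesn’t name one): each forecast is compared with what happened, coded 1 or 0, and the squared gaps are averaged, so 0 is perfect and a forecaster who always says 50% scores 0.25. The forecasts and outcomes below are made up for illustration.

```python
from typing import Sequence


def brier_score(forecasts: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    0 is a perfect score; always answering 0.5 scores 0.25. Lower is better.
    """
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need the same, non-zero number of forecasts and outcomes")
    for p, o in zip(forecasts, outcomes):
        if not 0.0 <= p <= 1.0 or o not in (0, 1):
            raise ValueError("forecasts must be in [0, 1] and outcomes 0 or 1")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical forecasts on three yes/no questions, and how they resolved.
sharp = [0.9, 0.2, 0.7]
hedger = [0.5, 0.5, 0.5]
resolved = [1, 0, 0]
print(brier_score(sharp, resolved))   # roughly 0.18
print(brier_score(hedger, resolved))  # 0.25
```

The sharp forecaster beats the hedger despite missing the third question, because confident correct calls earn more than the miss costs.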

However, I think the history of nuclear risk provides good case studies of how having better information would have reduced nuclear risk, and I’ll also sketch theoretical reasons why one should believe this is true.

A short history of intelligence failures

I think the role of intelligence failures during the cold war phase of the nuclear era can roughly be divided into 3 themes:

Incorrect estimates about the other side’s strength

Poor understanding of the intentions of the other side

The early success of espionage and failure of counter-espionage

One recurring motif throughout the cold war was US intelligence systematically overestimating the hostility of new leaders. The three examples that really stand out in a nuclear context are the US’s initial deep misunderstandings of Nikita Khrushchev, Mikhail Gorbachev and Fidel Castro. In all three cases US intelligence thought they were much more hostile to US interests than they in fact were. The worst case is Castro. Castro probably wasn’t especially committed to socialism when he first gained power - he was primarily opposed to the Batista regime and initially approached the US as an ally, as Ho Chi Minh had done earlier. The US intelligence community’s misunderstanding of Castro’s intentions meant that US politicians pushed Castro into Soviet hands. The Cuban missile crisis would follow from this.

The failures to understand the intentions of Khrushchev and Gorbachev were probably less impactful. US intelligence thought that Khrushchev was a leader in a similar mould to Stalin. It’s unclear whether détente was ever a possibility in 1953, but the aggressive stance the US adopted towards the Soviets probably lowered the probability that rapprochement would be reached in the early days of Khrushchev’s rule. A similar mistake was made over Gorbachev. In this case, initial American antipathy didn’t close the window for an agreement to reduce the size of the US and Soviet nuclear arsenals, or for a general ending of the cold war, but it probably delayed the former by a year or so.

The US intelligence community also failed to see that it was in fact the US that had a large advantage in nuclear missiles and launch systems during the 50s and early 60s. Specifically, the US intelligence community essentially believed Khrushchev when he falsely said that the USSR was “producing missiles like sausages.” In fact, by 1960 the USSR had only 12 ICBMs, and they used liquid fuel, meaning they took 8 hours to prepare for launch and were thus vulnerable to a first strike. The effect of this was that the US engaged in a massive build-up of ICBMs, so that by 1962 it had about 10 times as many as the USSR. This had two effects that conspired to increase the risk of existential catastrophe from nuclear war. First, there were simply more nuclear weapons, meaning more people would die in a nuclear war. This probably didn’t just mean that the US had more nuclear weapons - it also meant that the USSR would build more missiles to try to match the US. Second, it contributed to the Soviet decision to place missiles in Cuba. By placing missiles in Cuba, the USSR was potentially able to counteract American nuclear superiority, since it could effectively match US long-range missiles with intermediate-range missiles launchable from Cuba. I think it’s very unclear how much these considerations mattered for the decision - the missiles could still be hit by a first strike, including by conventional forces, so I’m not sure they had any strategic value, but it seems plausible that Soviet leaders thought they did.

Early on in the cold war, intelligence played a very important role because the Soviets were able to steal much of the technical knowledge needed to build nuclear weapons. This sort of intelligence failure seems unlikely to be fixed by forecasting - one could perhaps imagine intelligence agencies applying internal forecasting to the question “who’s an enemy spy?”, but this seems a more speculative application. Soviet intelligence gathering continued throughout the cold war and in general sped up the Soviet nuclear program, and Soviet technology more generally. Eventually the US found out about Soviet spying and started feeding actively damaging information to Soviet spies.

A theory of change for forecasting

I think it’s instructive here to think about the more general reasons why we have wars. In general, wars are very costly. It’d be much better for both sides if they could agree on who would win the war and sign a peace treaty before the war even started. We see this very commonly in nature - think of gorillas thumping their chests, trying to show that they’re stronger so that the other one backs down. We’ve also seen this in the history of nuclear standoffs. For instance, with the US and USSR “eyeball to eyeball” over West Berlin in 1961, the USSR backed down because the US revealed that it knew it had a massive advantage in ICBMs.

Here are nine reasons why countries might go to war rather than agree to a peace treaty.

Countries bluffing about being stronger than they in fact are

One or both countries overestimate their own strength relative to their adversary

Preemptive strike

Inability to credibly commit to the peace treaty

War as an intrinsic good - often this is because of revenge

Carrying out a threat

Indivisible goods

War is actually not that costly for the decision makers

Bargaining failure

Forecasting has the potential to significantly reduce risks from bluffing, incorrect estimates of relative military strength and incorrectly anticipating a preemptive strike. The history of intelligence failures shows that all of these were key contributors to some of the most dangerous moments in human history.

Bluffing and incorrect estimates

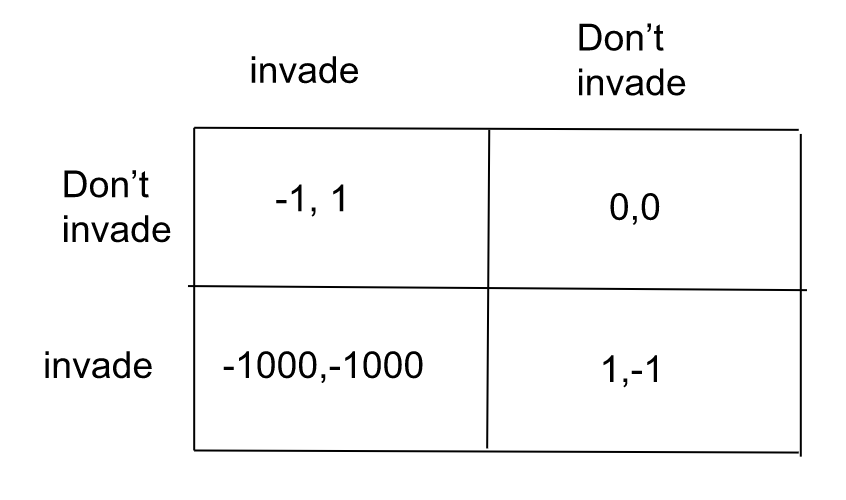

Two countries are in a military conflict. Each could be one of two types, a weak type or a strong type. In a nuclear weapons context, the strong type corresponds quite well to having a survivable second-strike capability, or a first strike sufficient to destroy the vast majority of your adversary’s nuclear forces. In a nuclear standoff, if two strong types or two weak types are facing each other, neither side wants to enter an armed conflict with the other because of the risk of war. For two weak types this is maximally fraught because, in this particular model, being a weak type corresponds to not having a survivable second strike, meaning whoever pushes the button second loses.

In the game above, the Nash equilibria are the two pure-strategy profiles in which one player invades and the other doesn’t, (Invade, Don’t invade) and (Don’t invade, Invade), plus a mixed-strategy equilibrium in which both players randomise between Invade and Don’t invade, with the probabilities determined by the ratios between the payoffs. In this game, the probability of invading can be made arbitrarily small by making the payoff to both players choosing Invade arbitrarily negative.
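To see why the last claim holds, here’s a minimal sketch that solves for the symmetric mixed equilibrium. The payoff labels are assumptions, since the matrix isn’t reproduced in text: `gain` for invading when the other side backs down, `loss` for backing down when the other side invades, `war` when both invade, and 0 when neither does, with war < loss < 0 < gain as in a game of chicken. Each player invades with the probability p that leaves the other indifferent, p·war + (1 - p)·gain = p·loss, which gives p = gain / (gain + loss - war).

```python
from fractions import Fraction


def invade_probability(gain, loss, war):
    """Probability that each player invades in the symmetric mixed equilibrium.

    Payoffs to the row player (hypothetical labels; the column player is symmetric):
      (Invade, Invade) = war, (Invade, Don't) = gain,
      (Don't, Invade) = loss, (Don't, Don't) = 0.
    """
    gain, loss, war = Fraction(gain), Fraction(loss), Fraction(war)
    if not war < loss < 0 < gain:
        raise ValueError("expects a game of chicken: war < loss < 0 < gain")
    # Indifference condition: p*war + (1 - p)*gain == p*loss + (1 - p)*0
    return gain / (gain + loss - war)


# Making mutual invasion more catastrophic shrinks the chance of invading,
# and the chance that both invade (i.e. war) is p squared.
for war in (-10, -100, -1000):
    p = invade_probability(gain=1, loss=-1, war=war)
    print(war, p, p * p)
# -10 1/10 1/100
# -100 1/100 1/10000
# -1000 1/1000 1/1000000
```

Exact fractions are used so the indifference condition can be checked without rounding error.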

However, for a weak type playing a strong type the game matrix is different.

In this game, the strong type will always play Invade, while the weak type will always play Don’t invade. Therefore, the weak type has an incentive to bluff that it’s the strong type. Because bluffs are possible, claims of strength become less credible, so a strong type may mistake a genuinely strong opponent for a bluffer; there’ll therefore be some probability that two strong types both invade and we get nuclear war. Forecasting changes this by making it much harder to bluff, because both players have much more certainty about the capabilities of the other player.

A concrete example of the failure mode of incorrectly estimating military strength is the US plan to invade Cuba during the missile crisis. The US believed it was a strong type facing a weak type - i.e. that there was a relatively small Soviet force in Cuba, without nuclear weapons aimed at Guantanamo Bay. In fact, it was facing an extremely large Soviet force armed with nuclear weapons. Converting this into the model I’ve laid out above, the Soviets had already “invaded” Cuba, and had the US known that, its best response would have been not to invade.

The Soviet position from 1958 to 1961, culminating in the 1961 Berlin crisis, is an example of getting uncomfortably close to nuclear war because of bluffing. The Soviets claimed to have a huge number of ICBMs that could hit the US mainland. They thought that the US believed their claim and, until 1961, the Americans did. On the basis of thinking that their bluff was successful, the Soviets acted very aggressively over West Berlin, the capitalist outpost in the Eastern Bloc. In 1961 they attempted to take West Berlin. US and Soviet tanks were 5 m apart, each on its own side of the East-West Berlin border. The Soviets only backed down after the US revealed that it knew both the true number of Soviet ICBMs and where they were located, making them vulnerable to a US first strike.

In both cases, better knowledge could have avoided these close calls, and it seems possible that forecasting could be effective at providing better knowledge. In an alternate world, American forecasters could have predicted a reasonable probability that the Soviet force in Cuba was very large and nuclear-capable, thereby taking any invasion plan off the table. Similarly, they could have put a high probability on the Soviets bluffing when Khrushchev claimed that the USSR was producing missiles “like sausages.” Preventing the Berlin crisis in that case would have required the Americans to tell the USSR that they knew it was bluffing, which is perhaps unlikely, given that the Americans didn’t communicate that fact until deep into the crisis. However, in this alternate world the Soviets may not have bluffed at all if it was established that bluffs were likely to fail, either because the Soviets knew explicitly about US forecasters, or because enough previous bluffs had failed that they’d inferred that the US had a good way of getting high quality intelligence.

Within the model, forecasting changes the prior probability that agents use when evaluating the probability that they’re facing type x given that they’ve observed action y. As the prior probability on the opponent’s true type tends to 1, the probability that the weak type bluffs in equilibrium tends to 0.
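A minimal sketch of that mechanism, under assumptions the post doesn’t spell out: the weak type always claims to be strong (so the claim itself carries no information), and forecasting is modelled as an independent report that names the opponent’s true type with some accuracy. The function names and numbers are hypothetical, and this doesn’t solve for the equilibrium bluffing rate; it only shows why bluffing pays less as forecasts sharpen, because the observer’s belief that a bluffer is strong falls towards 0.

```python
from fractions import Fraction


def bayes(prior, like_if_strong, like_if_weak):
    """Posterior P(strong | evidence) from P(strong) and the two likelihoods."""
    num = prior * like_if_strong
    return num / (num + (1 - prior) * like_if_weak)


def belief_after_bluff(prior, forecast_accuracy):
    """Belief that a bluffing weak opponent is strong, after its claim and a forecast."""
    # Hypothetical assumption: both types claim "strong", so the claim changes nothing.
    after_claim = bayes(prior, Fraction(1), Fraction(1))
    # The forecast (correctly) says "weak": likely if weak, unlikely if strong.
    return bayes(after_claim, 1 - forecast_accuracy, forecast_accuracy)


half = Fraction(1, 2)
for accuracy in (Fraction(1, 2), Fraction(9, 10), Fraction(99, 100)):
    print(accuracy, belief_after_bluff(half, accuracy))
# 1/2 1/2
# 9/10 1/10
# 99/100 1/100
```

A coin-flip forecast (accuracy 1/2) leaves the bluff fully effective; each step up in accuracy cuts the bluffer’s credibility accordingly.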

Preemptive strike

The fear of a preemptive strike haunted both the USSR and the US until just before the end of the cold war. One prominent example of this substantially increasing risk was the Soviet fear that the Able Archer military exercise in 1983 would be used as a ruse to give NATO cover to launch a first strike against the Soviet Union. In response, the Soviet Union drew up its own plans for a preemptive strike. Most concerning was the interaction between this incorrect fear and the extremely high false-positive rate of early warning systems.

In September 1983, in the run-up to Able Archer, Stanislav Petrov saw the Soviet early warning system indicate that 5 US missiles were heading towards the USSR. He was an engineer by training who had worked on the early warning system and knew that it was relatively unreliable. The warning was also odd because it showed only 5 missiles, whereas Soviet soldiers had been trained to expect a first strike to be full scale, with thousands of missiles, which was the correct description of US strategy. For those two reasons, Petrov didn’t report the warning to his superiors. However, it is easy to imagine that if the early warning system had failed in such a way that many more missiles were reported as incoming, as had happened many times before, he would have reported it. Now, it’s not at all clear that this would have led to a launch - it was commonly known that false positives were common, and the Soviet system required three individuals to approve a nuclear launch. However, combined with the fact that the Soviets were expecting a preemptive strike, it seems quite possible that they would have launched their own weapons.

The Able Archer crisis was the result of three intelligence failures. The first two were macro failures. First, the Soviets believed that the US was planning a preemptive strike, when an unprovoked preemptive strike was unimaginable to US policy makers in 1983. Second, the US failed to realise that the Soviets really did believe Able Archer was likely to be cover for an attack, and so neither halted the exercise nor gave the Soviets some other assurance that it wasn’t planning one. Finally, Soviet intelligence on the ground in NATO countries interpreted completely banal actions as preparations for war, which served to heighten Soviet paranoia.

Forecasting can help avoid this failure in a fairly obvious way. Had the Soviets employed good forecasting techniques, they would have been disabused of the notion that NATO was contemplating a surprise attack.
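The interaction between a fearful prior and an unreliable warning system can be made concrete with Bayes’ rule. Here’s a minimal sketch with purely illustrative numbers, not historical estimates: a hypothetical system that always flags a real attack but also raises a false alarm 1 time in 20. The same alarm means very different things depending on how likely an attack seemed beforehand, and that prior is exactly what better forecasting would have corrected.

```python
from fractions import Fraction


def p_attack_given_alarm(prior_attack, hit_rate, false_alarm_rate):
    """P(real attack | alarm) by Bayes' rule."""
    true_alarms = prior_attack * hit_rate
    false_alarms = (1 - prior_attack) * false_alarm_rate
    return true_alarms / (true_alarms + false_alarms)


hit, false_alarm = Fraction(1), Fraction(1, 20)  # illustrative, not historical
calm = p_attack_given_alarm(Fraction(1, 1000), hit, false_alarm)
paranoid = p_attack_given_alarm(Fraction(1, 10), hit, false_alarm)
print(calm, float(calm))          # 20/1019, about 0.02
print(paranoid, float(paranoid))  # 20/29, about 0.69
```

With a calm prior the alarm is almost certainly false; with a paranoid one it looks more likely than not to be real, even though the hardware is identical.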

Bargaining failure

Bargaining failure is one way wars start. Both sides are generally incentivised to reach a bargaining solution rather than go to war. In a bargaining game, neither side will accept an offer that’s worse than its “outside option” - the payoff it’d get if bargaining breaks down. Unfortunately, the Myerson-Satterthwaite theorem guarantees that if both players are uncertain about how the other values its outside option, there is some chance that bargaining will break down (at least when one player has all of the “good”). The intuition is that each player is incentivised to gamble that the other player doesn’t have good outside options, and so will make an offer that has some chance of being rejected. In the context of war, an offer being rejected means that the two states go to war, which in a nuclear standoff could mean nuclear war. Reducing that uncertainty via forecasting would reduce the likelihood of bargaining failure by reducing the probability that an offer is rejected.
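The theorem itself concerns general bargaining mechanisms, so here is something simpler that shows the same risk-return trade-off: a minimal sketch of a take-it-or-leave-it offer under one-sided uncertainty. Assumptions, all hypothetical: the stakes are worth 1 in total, the proposer gets `proposer_war` if war breaks out, and the responder’s war payoff is uniformly distributed over an interval of the given `width` around `centre`. The proposer picks the offer that maximises its expected payoff, x = (1 + lo - proposer_war) / 2 clipped to the interval, and the responder rejects whenever its war payoff exceeds the offer.

```python
from fractions import Fraction


def war_probability(proposer_war, centre, width):
    """Chance an optimal take-it-or-leave-it offer is rejected (i.e. war).

    The stakes are worth 1 in total. The responder's war payoff is uniform
    on [centre - width/2, centre + width/2], and it rejects any offer below it.
    """
    proposer_war, centre, width = map(Fraction, (proposer_war, centre, width))
    if width <= 0:
        raise ValueError("width must be positive")
    lo, hi = centre - width / 2, centre + width / 2
    # Maximise P(accept) * (1 - x) + P(reject) * proposer_war over the offer x;
    # the first-order condition gives x = (1 + lo - proposer_war) / 2.
    offer = min(max((1 + lo - proposer_war) / 2, lo), hi)
    return (hi - offer) / (hi - lo)


# War costs both sides: 3/10 + 2/5 < 1, so a peaceful split always exists.
for width in ("3/5", "2/5", "1/5"):
    print(width, war_probability("3/10", "2/5", width))
# 3/5 1/2
# 2/5 3/8
# 1/5 0
```

Even though a mutually better peaceful split always exists here, the proposer knowingly risks war; narrowing the uncertainty, which is what better forecasting would do, cuts that risk and eventually eliminates it.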

How forecasting could make things worse

We should expect forecasting to, at least sometimes, reduce the perceived level of uncertainty. The main way this could be harmful is that more precise estimates let leaders take calculated gambles, and the optimal level of personal risk for the leader of a nuclear-armed state differs from the optimal level of risk for humanity. A leader may be willing to gamble a 0.1% chance of nuclear war for a very high chance of some political success, especially if they’re personally under threat of being removed from power, as is relatively common in authoritarian states. However, for the world this would be very bad. One could imagine exceptions - it could have been reasonable when fighting Nazi Germany - but in general this seems like it would be bad.

A second risk comes from forecasting being brittle in an unexpected way. For instance, if there were a class of international relations questions that a group of forecasters systematically got wrong, this could increase risk in the same way that US intelligence analysts getting some questions wrong increased risk. The reason this increases risk, rather than merely bringing it back to approximately the level without forecasters, is that a lot of trust will likely be put in forecasters because, presumably, they’ll have developed a good track record. Because forecasting is relatively new and hasn’t been used in practice very much, it’s possible that there’ll be an area where it’s unexpectedly weak. In that case, the risk of forecasters being wrong in that area won’t be adequately factored in by decision makers, making them overconfident and therefore willing to take risks they otherwise wouldn’t have taken.

Why forecasting might have no effect

I’m not that confident in this section.

It’s possible that forecasting has no effect because, by reducing the risk of war, it incentivises countries to take more aggressive actions that raise the risk of war again. This is because countries are, in theory, trading off their risk of war against the gains from taking various actions. If the probability that any given action leads to war is lower, then countries will be incentivised to take more of those actions. This is actually exactly the same theory that predicts that quantitative easing doesn’t work - agents rebalance their portfolios after central banks buy safe assets.

Governments, unlike financial markets, take a long time to rebalance their portfolios. If we reduce the level of nuclear risk, it seems very unclear that governments would actually respond by taking riskier actions. The history of nuclear policy looks pretty random in lots of ways, with political decision makers often exerting fairly limited control over policy. For instance, JFK hated the US nuclear war plan, as did every subsequent president until it was fixed in the mid-1980s.

I think there is some case for these forecasts not being open to the public - if they became important, they could be subverted by an influx of new forecasters.

On balance, I think my argument is probably false, but it isn’t stupid.
